Pair Search

Try out the prototype here today!

The bigger picture - Search for Humans and Machines alike

A good decision is an informed one. Policy officers and civil society need information to make decisions and learn about areas of public interest; improving search will help move us closer to this goal.

But good search isn't limited to human use. Advances in Large Language Models have led to a proliferation of data-augmented LLM generation, more commonly known as Retrieval Augmented Generation (RAG). At the heart of a good RAG system is a good search engine that retrieves the relevant data chunks for the model to ingest. Understanding the ins and outs of building a good search engine is essential not just to our work on the Pair suite of products, but is potentially helpful to other LLM products in government as well.

Hansard

Every word that is said in Parliament is recorded and published as the Official Report of Parliamentary debates or “Hansard”. These reports can be found as far back as 1955, when Parliament was known as the Legislative Assembly. This treasure trove of information is used by policy makers, professionals and members of the public alike.

However, searching for relevant reports is currently difficult and unintuitive. Results are poor because the search is 100% keyword-based: documents that frequently mention a single word from the query are often ranked highly, when ranking should take the entire search phrase into account.
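The failure mode can be shown with a toy comparison. This is not a reproduction of the current engine's ranking; it only contrasts a naive score that sums raw keyword counts with one that rewards covering every word of the query. Both documents are invented for illustration.

```python
from collections import Counter

def naive_keyword_score(query: str, doc: str) -> int:
    """Sum raw counts of each query word: one heavily repeated word can dominate."""
    counts = Counter(doc.lower().split())
    return sum(counts[term] for term in query.lower().split())

def coverage_score(query: str, doc: str) -> float:
    """Fraction of distinct query words present: rewards matching the whole phrase."""
    terms = set(query.lower().split())
    return len(terms & set(doc.lower().split())) / len(terms)

# Hypothetical documents, invented for illustration only.
docs = {
    "covid_support_debate": "covid covid covid covid support measures for covid affected firms",
    "rapid_testing_motion": "expanding covid 19 rapid testing at community sites",
}
query = "covid 19 rapid testing"

for name, text in docs.items():
    print(name, naive_keyword_score(query, text), coverage_score(query, text))
# covid_support_debate 5 0.25
# rapid_testing_motion 4 1.0
```

The naive score prefers the debate that merely repeats "covid", while the coverage score prefers the document that actually addresses rapid testing.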

For instance, look at this search for “covid 19 rapid testing” in the current Hansard search engine:

Due to how frequently Covid is mentioned in Parliamentary debates, the actual results are flooded with reports that are only tangentially related to the query, if at all. Additionally, only the titles are presented without any smart text snippets to help a user confirm whether a link is likely to be useful. Compare that to the results from searching the same query in Pair Search:

Search smarter, not harder

Current public and enterprise search experiences are generally low quality, expensive and slow. We believe that this does not have to be the case. Information should and can be readily accessible at everyone’s fingertips.

Pair Search was prototyped during OGP’s Hack for Public Good 2024 Hackathon, guided by the principle that search should be:

- State of the Art (SOTA) - Search should leverage all the recent advances in search technology
- Fast - The complexity of the search algorithms should not impact search latency
- Simple, Intuitive and Effective
  - Usage of the app should be intuitive
  - Information should be presented immediately, in as useful a format as possible, to help users decide which results are worth investigating further

The site is currently live and indexes the full Hansard database from 1955 to January 2024, spanning over 30,000 reports. We are already seeing dramatic improvements in search results with Pair Search.

Search that actually works

Pair Search leverages Vespa.ai, a highly versatile and scalable open-source big data serving engine, to offer state-of-the-art search capabilities. We make use of its extensive text-search capabilities, as well as its continuous integration of state-of-the-art techniques and models (e.g. the e5/m3 models and ColBERTv2 re-ranking).

*Note: the video above showcases a "Magic Summary" feature that automatically summarizes the best results as a chronological timeline. However, we are rolling out Pair Search without it at first, to focus on the core search experience.

The Data

We undertook the challenging task of scraping and parsing the extensive Hansard database, which contains over 30,000 reports dating back to 1955. Because the data formats evolved over the decades, standardizing this diverse information for search presented significant hurdles, but we overcame them to create a uniform format suited to efficient search.
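Dates are a typical example of this standardization work. The sketch below assumes a handful of hypothetical date layouts and hypothetical record keys (`date`, `title`, `body`), since the real scraped formats are not listed here; it maps each raw record onto one uniform schema.

```python
from dataclasses import dataclass
from datetime import date, datetime

# Hypothetical layouts; slash and dash forms are assumed to be day-first.
DATE_FORMATS = ("%d %B %Y", "%Y-%m-%d", "%d/%m/%Y", "%d-%m-%Y")

@dataclass
class Report:
    sitting_date: date
    title: str
    text: str

def parse_sitting_date(raw: str) -> date:
    """Try each known layout in turn and return the first that parses."""
    cleaned = " ".join(raw.split())  # collapse stray whitespace left by scraping
    for fmt in DATE_FORMATS:
        try:
            return datetime.strptime(cleaned, fmt).date()
        except ValueError:
            continue
    raise ValueError(f"Unrecognized date format: {raw!r}")

def normalize(raw_record: dict) -> Report:
    """Map a scraped record (hypothetical keys) onto a single uniform schema."""
    return Report(
        sitting_date=parse_sitting_date(raw_record["date"]),
        title=raw_record.get("title", "").strip(),
        text=" ".join(raw_record.get("body", "").split()),
    )
```

Keeping the list of accepted layouts explicit means an unfamiliar format fails loudly instead of being silently misparsed.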

The Engine

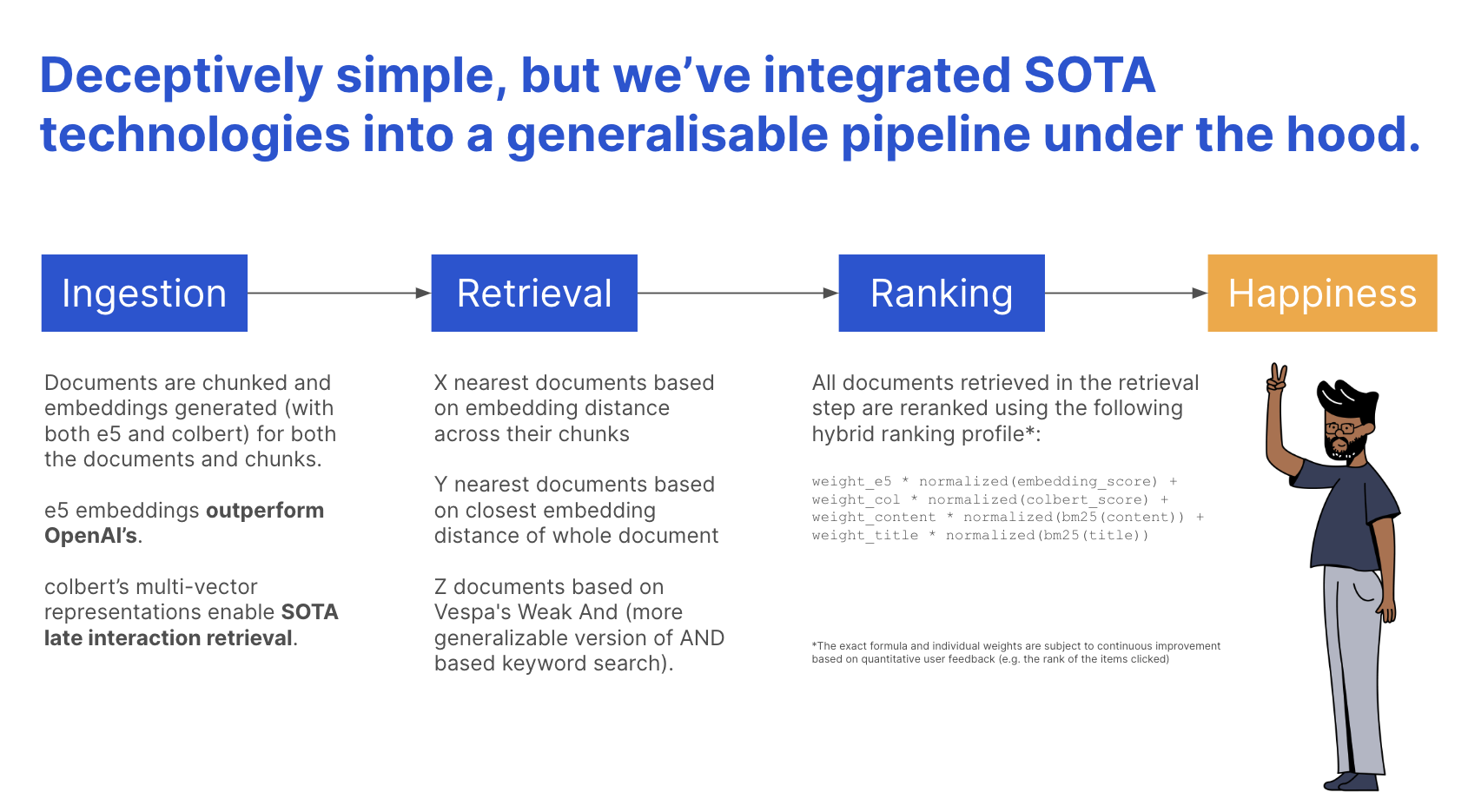

The search process in Pair Search is divided into three phases:

- Document-Processing:

This initial phase turns the scraped Hansard reports, whose formats changed over the decades since 1955, into a single uniform structure that can be indexed and searched efficiently.

- Retrieval:

The retrieval mechanism combines keyword-based and semantic search strategies. The keyword-based search utilizes Vespa's weakAnd operator alongside nativeRank and BM25 text matching algorithms to efficiently sift through vast amounts of data.

On the semantic front, Pair Search incorporates e5 embeddings, which offer a balance of speed, cost-effectiveness, and enhanced performance compared to alternatives like OpenAI's ada embeddings model.

This dual approach makes search robust and nuanced, capturing both the intent behind user queries and the exact wording of the documents.

- Re-ranking:

To keep search fast despite our use of computationally expensive re-ranking models, we use a three-phase approach that refines the search results iteratively.

Phase 1: each content node employs cost-effective algorithms to narrow down the initial set of results.

Phase 2: a more resource-intensive re-ranking is performed on the remaining candidates using the ColBERTv2 model, ensuring that the most relevant documents are prioritized.

Phase 3 (global phase): the final phase merges the top results from all content nodes and computes a hybrid score that combines the semantic, keyword-based and ColBERTv2 scores. Using a hybrid score gives us significantly better results than relying on any single metric, which tends to be overly biased towards one dimension of result quality.
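The dual retrieval step can be sketched in plain Python. This is a simplified stand-in, not Vespa's implementation: Okapi BM25 (with Lucene-style IDF) plays the keyword side, while weakAnd and nativeRank are not reproduced, and short hand-written vectors stand in for e5 embeddings. Taking the union of the top keyword hits and the top semantic hits is an assumption about how the two candidate sets are combined.

```python
import math
from collections import Counter

def bm25_scores(query: str, docs: list[str], k1: float = 1.2, b: float = 0.75) -> list[float]:
    """Okapi BM25 score of every document for the query (Lucene-style IDF)."""
    tokenized = [d.lower().split() for d in docs]
    n_docs = len(tokenized)
    avgdl = sum(len(t) for t in tokenized) / n_docs
    # Document frequency: number of documents containing each term at least once.
    df = Counter(term for toks in tokenized for term in set(toks))
    scores = []
    for toks in tokenized:
        tf = Counter(toks)
        score = 0.0
        for term in query.lower().split():
            if tf[term] == 0:
                continue
            idf = math.log((n_docs - df[term] + 0.5) / (df[term] + 0.5) + 1)
            # Term-frequency saturation (k1) and document-length normalization (b).
            norm = tf[term] + k1 * (1 - b + b * len(toks) / avgdl)
            score += idf * tf[term] * (k1 + 1) / norm
        scores.append(score)
    return scores

def cosine(u: list[float], v: list[float]) -> float:
    """Cosine similarity between two embedding vectors (0.0 for a zero vector)."""
    dot = sum(x * y for x, y in zip(u, v))
    norm = math.sqrt(sum(x * x for x in u)) * math.sqrt(sum(y * y for y in v))
    return dot / norm if norm else 0.0

def retrieve(query: str, query_vec: list[float], docs: list[str],
             doc_vecs: list[list[float]], k: int = 2) -> set[int]:
    """Union of the top-k keyword hits and the top-k semantic hits (document indices)."""
    kw = bm25_scores(query, docs)
    sem = [cosine(query_vec, v) for v in doc_vecs]
    top_kw = sorted((i for i, s in enumerate(kw) if s > 0), key=lambda i: kw[i], reverse=True)[:k]
    top_sem = sorted(range(len(docs)), key=lambda i: sem[i], reverse=True)[:k]
    return set(top_kw) | set(top_sem)
```

A document about "antigen swab kits" shares no words with "covid rapid testing", so BM25 scores it zero, yet a good embedding can still place it close to the query; the union lets either signal nominate a candidate.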
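The three re-ranking phases can be modeled the same way, under stated assumptions. `maxsim` implements ColBERT-style late interaction over toy token vectors (real ColBERTv2 uses learned, normalized token embeddings). The phase-1 score (keyword plus semantic), the min-max normalization and the 0.3/0.3/0.4 weights are hypothetical, not our production values. In Vespa itself these phases are declared in a rank profile (first-phase, second-phase and global-phase) rather than hand-written like this.

```python
from dataclasses import dataclass

Vector = list[float]

def dot(u: Vector, v: Vector) -> float:
    return sum(x * y for x, y in zip(u, v))

def maxsim(query_tokens: list[Vector], doc_tokens: list[Vector]) -> float:
    """ColBERT-style late interaction: each query token takes its best-matching
    document token, and those per-token maxima are summed."""
    return sum(max(dot(q, d) for d in doc_tokens) for q in query_tokens)

@dataclass
class Candidate:
    doc_id: str
    keyword: float        # keyword score from retrieval (e.g. BM25)
    semantic: float       # embedding similarity from retrieval
    tokens: list[Vector]  # per-token document embeddings used in phase 2
    colbert: float = 0.0  # filled in by phase 2

def content_node_rank(cands: list[Candidate], query_tokens: list[Vector], keep: int) -> list[Candidate]:
    """Phases 1 and 2, run independently on each content node."""
    # Phase 1: a cheap score over every local candidate; keep only the best `keep`.
    survivors = sorted(cands, key=lambda c: c.keyword + c.semantic, reverse=True)[:keep]
    # Phase 2: the expensive late-interaction score runs on the survivors only.
    for c in survivors:
        c.colbert = maxsim(query_tokens, c.tokens)
    return survivors

def min_max(values: list[float]) -> list[float]:
    """Rescale to [0, 1] so differently scaled signals can be blended."""
    lo, hi = min(values), max(values)
    return [0.0 if hi == lo else (v - lo) / (hi - lo) for v in values]

def global_rank(per_node: list[list[Candidate]],
                weights: tuple[float, float, float] = (0.3, 0.3, 0.4)) -> list[tuple[str, float]]:
    """Phase 3: merge every node's survivors and sort by a weighted hybrid score."""
    merged = [c for node in per_node for c in node]
    if not merged:
        return []
    kw = min_max([c.keyword for c in merged])
    sem = min_max([c.semantic for c in merged])
    col = min_max([c.colbert for c in merged])
    w_kw, w_sem, w_col = weights
    scored = [(c.doc_id, w_kw * k + w_sem * s + w_col * x)
              for c, k, s, x in zip(merged, kw, sem, col)]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)
```

Only `keep` candidates per node ever reach the expensive MaxSim step, which is what keeps latency bounded as the re-ranking model grows more complex.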

What's happening

We have soft-launched the Pair Search (Hansard) prototype with AGC and a selection of users who frequently use Hansard search, including Ministry of Law legal policy officers, Comm Ops officers at MCI and PMO, and COS coordinators, among others. In the early days of the soft launch we are averaging ~150 daily users and ~200 daily searches, and initial user feedback has been positive:

"This will improve productivity by a few folds. Hansard search was the most painful thing previously" - MCI Policy Officer

"Wah useful. Returns results much faster than the current one" - MHA Policy Officer #1

"Wow this is really impressive!" - MHA Policy Officer #2

The product was also mentioned in Parliament:

"The Hansard is an open book. We can all refer to it readily, and soon, we will be able to do a generative AI search on it." - Prime Minister Lee Hsien Loong

We have also seen interest from multiple agencies looking to leverage our core search engine on their own data.

What's next

Expanding our Data Sources

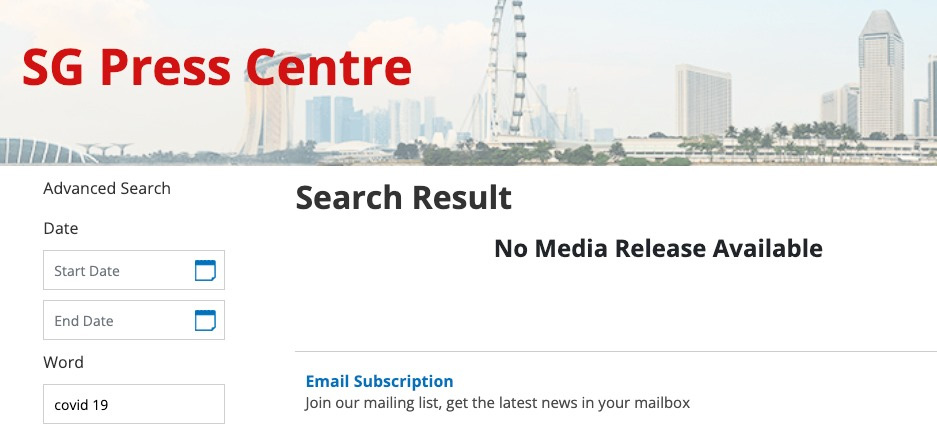

Hansard is not the only useful public corpus of data with a less-than-ideal search experience. For instance, even a simple query such as "Covid 19" on the SG Press Centre page yields zero results (you have to search for "covid-19", with a hyphen, for it to return any documents).
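We do not know how the Press Centre search tokenizes text, so this is only an illustration of the general fix: normalizing queries and documents into the same tokens, so that spacing and punctuation variants of a term match each other. The tokenizer below handles ASCII letters and digits only, a simplifying assumption.

```python
import re

def tokenize(text: str) -> list[str]:
    """Lower-case, then split on any run of characters that are not a-z or 0-9,
    so "COVID-19", "covid 19" and "Covid_19" all yield ["covid", "19"]."""
    return [t for t in re.split(r"[^0-9a-z]+", text.lower()) if t]

def matches(query: str, doc: str) -> bool:
    """True if every query token appears somewhere among the document's tokens."""
    return set(tokenize(query)) <= set(tokenize(doc))

# Exact substring matching misses the hyphenated form...
print("covid 19" in "covid-19 vaccination rates")          # False
# ...while normalized tokens match either spelling.
print(matches("Covid 19", "COVID-19 vaccination rates"))  # True
```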

One of our immediate goals is therefore to expand the number of data corpora we make available for search. We are currently working with the Judiciary to incorporate case judgements from the High Court and Court of Appeal, which would go hand-in-hand with the current Hansard dataset for legal research use cases.

Going from Search to Recommendations

The next goal for our search engine is to fully leverage the search index we've built for not just direct queries, but also for enhancing discovery and recommendations. By analyzing search patterns, user interactions, and content relationships within our index, we can proactively surface relevant content and personalized suggestions.

This approach will shift the experience from purely query-driven search towards content discovery tailored to individual users' needs and preferences, making the platform more engaging and useful.
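One simple building block for this kind of discovery is "more like this": ranking other documents by embedding similarity to the one a user is reading. A minimal sketch, assuming each document already has an embedding (toy two-dimensional vectors here); it shows one possible approach, not a committed design.

```python
import math

def cosine(u: list[float], v: list[float]) -> float:
    """Cosine similarity between two embedding vectors (0.0 for a zero vector)."""
    dot = sum(x * y for x, y in zip(u, v))
    norm = math.sqrt(sum(x * x for x in u)) * math.sqrt(sum(y * y for y in v))
    return dot / norm if norm else 0.0

def more_like_this(doc_id: str, embeddings: dict[str, list[float]], k: int = 3) -> list[str]:
    """Return the ids of the k documents whose embeddings are closest to doc_id's."""
    target = embeddings[doc_id]
    scored = [(other, cosine(target, vec))
              for other, vec in embeddings.items() if other != doc_id]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return [other for other, _ in scored[:k]]
```

Because the document embeddings already exist in the search index, recommendations of this kind reuse the same data rather than requiring a separate store.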

Increasing the Search Performance

We are continually working to improve search performance, which we track through metrics such as the average rank of clicked results and the number of results pages a user has to go through before finding what they want. These metrics can guide tuning of our hybrid algorithm and its weights to improve both the accuracy and the relevance of results.
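Both metrics are straightforward to compute from search logs. A minimal sketch, assuming a hypothetical log that records, for each search, the 1-based rank of the first clicked result (or `None` when nothing was clicked), and assuming 10 results per page:

```python
import math
from statistics import mean
from typing import Optional

def average_clicked_rank(first_click_ranks: list[Optional[int]]) -> float:
    """Mean rank of the first clicked result, ignoring searches with no click."""
    clicked = [r for r in first_click_ranks if r is not None]
    return mean(clicked) if clicked else math.nan

def pages_to_click(rank: int, page_size: int = 10) -> int:
    """Results page (1-based) on which a result at this rank appears."""
    return math.ceil(rank / page_size)

def average_pages(first_click_ranks: list[Optional[int]], page_size: int = 10) -> float:
    """Mean number of results pages visited before the first click."""
    clicked = [r for r in first_click_ranks if r is not None]
    return mean(pages_to_click(r, page_size) for r in clicked) if clicked else math.nan
```

Lower is better for both; re-running them after a change to the hybrid weights gives a quick check of whether the change helped.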

We are also exploring the use of LLMs to improve search performance by (i) enriching the search index through automated tagging and potential-question generation, and (ii) enhancing the retrieval and ranking process through query expansion (appending related terms and phrases to a query to increase the chance of retrieving a relevant hit).
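Query expansion can be sketched independently of any particular model. `suggest_terms` is a hypothetical hook where an LLM call would go; the fixed stub below keeps the example runnable, and the three-term cap is an arbitrary choice.

```python
from typing import Callable

def expand_query(query: str, suggest_terms: Callable[[str], list[str]], max_terms: int = 3) -> str:
    """Append up to `max_terms` suggested terms that are not already in the query."""
    seen = set(query.lower().split())
    extra: list[str] = []
    for term in suggest_terms(query):
        if len(extra) >= max_terms:
            break
        t = term.lower().strip()
        if t and t not in seen:
            extra.append(t)
            seen.add(t)
    return " ".join([query, *extra])

def stub_suggest(query: str) -> list[str]:
    """Hypothetical stand-in for an LLM call that proposes related terms."""
    return ["ART", "antigen", "testing", "swab", "PCR"]

print(expand_query("covid 19 rapid testing", stub_suggest))
# covid 19 rapid testing art antigen swab
```

Capping and de-duplicating the added terms limits how far an over-eager suggestion can drift the query away from what the user typed.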

Broadening the Applications of Our Search Engine

We have also designed the search engine to work out of the box as the retrieval stack for a Retrieval Augmented Generation system, such as the one we have been trialing with the Assistants feature in Pair Chat. By providing an API to both the base search functionality and the RAG retrieval functionality, we hope to have our core engine serve multiple applications and use cases.
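On the retrieval side, RAG reduces to three steps: search, keep the best chunks that fit a context budget, and hand them to the model together with the question. A minimal sketch: `search` is a hypothetical callable standing in for the engine's API (whose actual interface is not described here), and the prompt wording and 2,000-character budget are arbitrary.

```python
from dataclasses import dataclass
from typing import Callable

@dataclass
class Chunk:
    source: str   # e.g. a report title or sitting date
    text: str
    score: float  # relevance score returned by the search engine

def build_rag_prompt(question: str, search: Callable[[str, int], list[Chunk]],
                     k: int = 5, max_chars: int = 2000) -> str:
    """Retrieve up to k chunks, keep the best ones that fit the budget, and build a prompt."""
    chunks = sorted(search(question, k), key=lambda c: c.score, reverse=True)[:k]
    context: list[str] = []
    used = 0
    for i, chunk in enumerate(chunks, start=1):
        block = f"[{i}] ({chunk.source}) {chunk.text}"
        if used + len(block) > max_chars:
            break  # stop before overflowing the context budget
        context.append(block)
        used += len(block)
    return ("Answer using only the numbered sources below, citing them by number.\n\n"
            + "\n".join(context)
            + f"\n\nQuestion: {question}")
```

Numbering the sources lets the generated answer cite specific reports, so users can check claims against the Hansard text.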

If you're currently using a search that doesn't quite work, or are interested in collaborating, reach out to us here!

Meet the team

The prototype was developed by the following team.